Docker Build: Beginner’s Guide to Building Docker Images

Docker has changed the way we create, package, and deploy applications. But this concept of containerizing applications isn’t new; it existed long before Docker.

Docker has just made container technology easy for people to use. This is why Docker is a must-have in most development workflows today. Chances are, your dream company is using Docker right now.

The official Docker documentation has many moving parts. Honestly, it can be overwhelming at first. You might find yourself piecing together information from several places just to build that Docker image you’ve always wanted to build.

Maybe creating Docker images has been a daunting task for you, but it won’t be after reading this post. Here, you’ll learn how to create (and how not to create) Docker images. You’ll be able to write a Dockerfile and publish Docker images like a pro.

Install Docker

First, you’ll need to install Docker. Docker runs natively on Linux. That doesn’t mean you can’t use Docker on Mac or Windows. In fact, there is Docker for Mac and Docker for Windows. I won’t go into detail on how to install Docker on your machine in this post. If you’re on a Linux machine, this guide will help you get Docker up and running.

Now that you have Docker set up on your machine, you’re one step closer to creating images with Docker. Chances are, you’ll come across two terms, “containers” and “images,” which can be confusing.

Docker images and containers

Docker containers

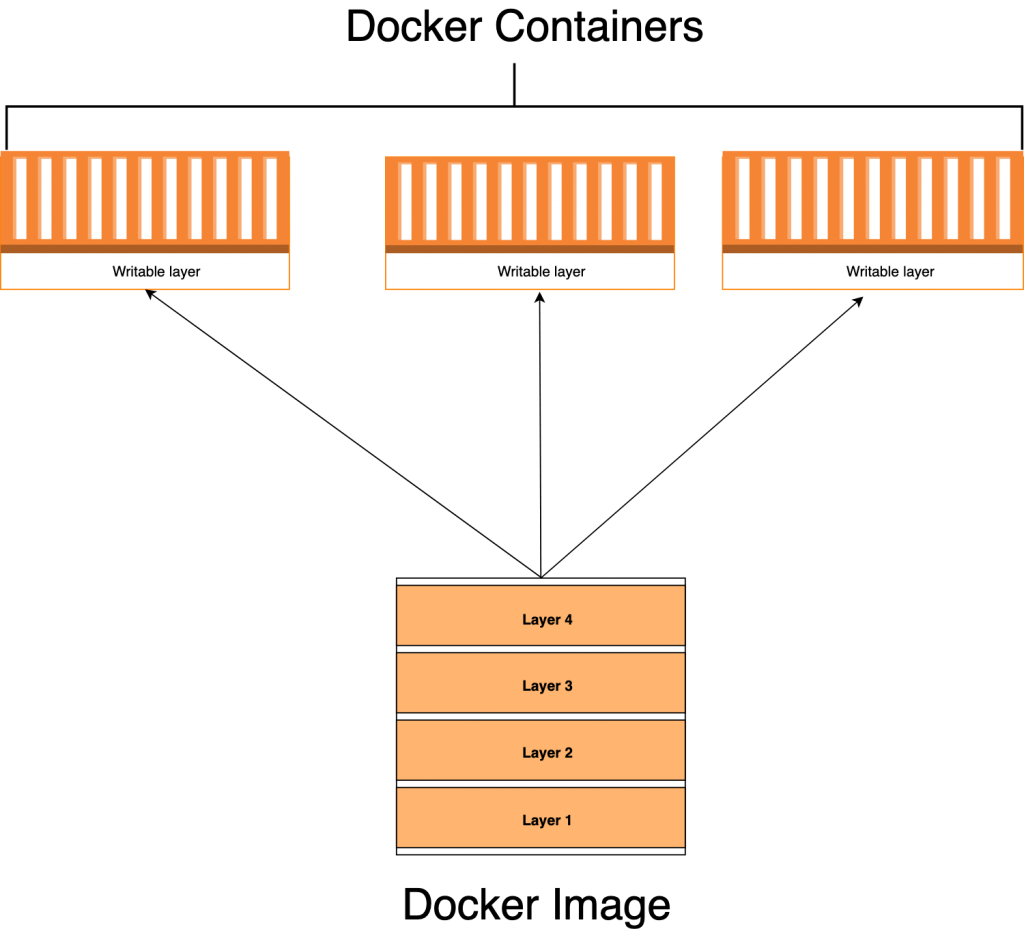

are instances of Docker images, either running or stopped. In fact, the main difference between Docker containers and images is that containers have a writable layer.

When you create a Docker

container, you add a writable layer on top of the Docker image. You can run many Docker containers from the same Docker image. You can view a Docker container as an instance of a Docker image.

Creating the first Docker image

It’s time to get our hands dirty and see how Docker build works in a real-life application. We will generate a simple Node.js application with the Express application generator. The Express generator is a CLI tool used for scaffolding Express applications. After that, we’ll go through the process of using Docker build to create a Docker image from the source code.

We start by installing the Express generator as follows:

$ npm install express-generator -g

Next, we scaffold our application using the following command:

$ express docker-app

Now we move into the new project directory and install package dependencies:

$ cd docker-app

$ npm install

Start the application with the following command:

$ npm start

If you point your browser to http://localhost:3000, you should see the default page for the app, with the text “Welcome to Express.”

Dockerfile

Mind you, the application is still running on your machine and you don’t have a Docker image yet. Of course, there are no magic wands you can wave at your app to turn it into a Docker container all of a sudden. You have to write a Dockerfile and build an image from it.

The official Docker documentation defines a Dockerfile as “a text document that contains all the commands a user could call on the command line to assemble an image.” Now that you know what a Dockerfile is, it’s time to write one.

In the root directory of the application, create a file named “Dockerfile”.

$ touch Dockerfile

Dockerignore

There’s one important concept you need to internalize: always keep your Docker image as lean as possible. This means packaging only what your application needs to run. Please don’t do the opposite.

In practice, the source code usually contains other files and directories such as .git, .idea, .vscode or travis.yml. These are essential to our development workflow, but our application doesn’t need them to run. It’s good practice not to have them in your image, and that’s what .dockerignore is for. We use it to prevent such files and directories from making their way into our build.

Create a file named .dockerignore in the root folder with this content:

    .git
    .gitignore
    node_modules
    npm-debug.log
    Dockerfile*
    docker-compose*
    README.md
    .vscode
    LICENSE

The base image

A Dockerfile typically starts from a base image. As defined in the Docker documentation, a base image or parent image is the image your image is based on. It’s your starting point. It could be an operating system such as Ubuntu or Red Hat, or a service such as MySQL or Redis.

Base images don’t just fall from the sky. They are created, and you too can create one from scratch. There are also many base images you can use, so you don’t need to create one in most cases.

We add the base image to the Dockerfile using the FROM command, followed by the name of the base image:

    # Filename: Dockerfile
    FROM node:10-alpine

Copying source code

Let’s instruct Docker to copy our source during Docker build:

    # Filename: Dockerfile
    FROM node:10-alpine
    WORKDIR /usr/src/app
    COPY package*.json ./
    RUN npm install
    COPY . .

First, we configure the working directory using WORKDIR. Then we copy the files using the COPY command. The first argument is the source path and the second is the destination path in the image file system. We copy package.json and install our project’s dependencies using npm install. This will create the node_modules directory that we ignored earlier in .dockerignore.

You might be wondering why we copied package.json before the source code. Docker images are made up of layers, and each layer is created from the output of one command. Since the package.json file doesn’t change as often as our source code, we don’t want to keep rebuilding node_modules every time we run Docker build.

Copying over the files that define the dependencies of our application and installing them immediately allows us to take advantage of the Docker cache. The main benefit here is faster build time. There’s a really nice blog post that explains this concept in detail.
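To make the caching argument concrete, here is a small sketch that models the idea outside Docker. It relies on a simplification: Docker reuses a COPY layer when the checksum of the copied files is unchanged. The scratch directory, the file contents, and the deps_fingerprint helper are made up for illustration and are not part of the app we generated.

```shell
# Sketch: model the cache check for `COPY package*.json ./` with a checksum.
# All paths and file contents are stand-ins created in a scratch directory.
demo_dir="$(mktemp -d)"
printf '{ "name": "docker-app" }\n' > "$demo_dir/package.json"
printf 'console.log("version 1");\n' > "$demo_dir/app.js"

# Fingerprint only the files that the first COPY instruction matches.
deps_fingerprint() {
  cat "$demo_dir"/package*.json | cksum
}

before="$(deps_fingerprint)"

# Simulate everyday work: the source changes, the dependency list does not.
printf 'console.log("version 2");\n' > "$demo_dir/app.js"

after="$(deps_fingerprint)"

if [ "$before" = "$after" ]; then
  echo "package*.json unchanged: the npm install layer can be reused"
else
  echo "package*.json changed: npm install has to run again"
fi
```

Editing app.js leaves the fingerprint alone, which is why only the final COPY . . layer needs rebuilding after a typical code change.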


Exposing a port

Exposing port 3000 informs Docker which port the container listens on at runtime. Let’s modify the Dockerfile and expose port 3000.

    # Filename: Dockerfile
    FROM node:10-alpine
    WORKDIR /usr/src/app
    COPY package*.json ./
    RUN npm install
    COPY . .
    EXPOSE 3000

Docker CMD

The CMD command tells Docker how to run the application we packaged in the image. CMD follows the format CMD ["command", "argument1", "argument2"].

    # Filename: Dockerfile
    FROM node:10-alpine
    WORKDIR /usr/src/app
    COPY package*.json ./
    RUN npm install
    COPY . .
    EXPOSE 3000
    CMD ["npm", "start"]

Creating Docker images

With the Dockerfile written, you can build the image using the following command:

$ docker build .

We can see the image we just built using the docker images command.

$ docker images

    REPOSITORY   TAG      IMAGE ID       CREATED         SIZE
    <none>       <none>   7b341adb0bf1   2 minutes ago   83.2MB

Tagging a Docker image

When you have a lot of images, it becomes difficult to know which image is which. Docker provides a way to tag your images with descriptive names of your choice. This is known as tagging.

$ docker build -t yourusername/repository-name .

Let’s proceed to tag the Docker image we just built.

$ docker build -t yourusername/example-node-app .

If you run the above command, your image should now be tagged. When you run docker images again, the image will be displayed with the name you chose.

$ docker images

    REPOSITORY                     TAG      IMAGE ID       CREATED         SIZE
    abiodunjames/example-node-app  latest   be083a8e3159   7 minutes ago   83.2 MB
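Notice that the TAG column shows latest even though we never typed it. When a name has no tag, Docker falls back to latest. The sketch below mimics that rule with plain shell string handling; split_image_ref is a hypothetical helper, not a Docker command, and it ignores digest references of the form name@sha256:...

```shell
# Sketch: split an image reference into repository and tag, defaulting to "latest".
# split_image_ref is a hypothetical helper, not part of the Docker CLI.
split_image_ref() {
  ref="$1"
  # Only a colon in the last path component marks a tag; a colon earlier
  # in the name could be a registry port, as in localhost:5000/app.
  case "${ref##*/}" in
    *:*) echo "repository=${ref%:*} tag=${ref##*:}" ;;
    *)   echo "repository=$ref tag=latest" ;;
  esac
}

split_image_ref yourusername/example-node-app
split_image_ref yourusername/example-node-app:v1
```

The first call reports tag=latest and the second reports tag=v1, which matches what docker images would list for those names.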

Running a Docker image

You run a Docker image using the docker run command. The command is as follows:

$ docker run -p 80:3000 yourusername/example-node-app

The command is quite simple. We provide the -p argument to map a port on the host machine (80) to the port the application listens on inside the container (3000). You can now access your app from your browser at http://localhost.
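The order of the two numbers is easy to mix up, so here is a tiny sketch that spells it out: host port first, container port second. Keep in mind that EXPOSE in the Dockerfile only documents the port; it is -p that actually publishes it. explain_publish is a hypothetical helper and only handles the plain two-part form used in this post.

```shell
# Sketch: read a HOST:CONTAINER pair the way we pass it to -p.
# explain_publish is a hypothetical helper; it only handles the two-part form.
explain_publish() {
  mapping="$1"
  host_port="${mapping%%:*}"       # everything before the first colon
  container_port="${mapping##*:}"  # everything after the last colon
  echo "host port $host_port -> container port $container_port"
}

explain_publish 80:3000
explain_publish 8080:3000
```

With the second mapping, the same app would be reachable at http://localhost:8080 while still listening on 3000 inside the container.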

To run the container in detached mode, you can provide the -d argument:

$ docker run -d -p 80:3000 yourusername/example-node-app

Congratulations! You’ve just packaged an app that can run anywhere Docker is installed.

Push a Docker image to the Docker repository

The Docker image you created still resides on the local machine. This means you can’t run it on any other machine outside of your own, not even in production! To make the Docker image available for use elsewhere, you must push it to a Docker registry.

A Docker registry is where the Docker images reside. One of the popular Docker registries is Docker Hub. You’ll need an account to push Docker images into Docker Hub, and you can create one here.

With your Docker Hub credentials ready, you just need to log in with your username and password.

$ docker login

Retag the image with a version number:

$ docker tag yourusername/example-node-app yourdockerhubusername/example-node-app:v1

Then push with the following:

$ docker push yourdockerhubusername/example-node-app:v1
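If you publish often, the retag and push steps can be wrapped in one small function. This is only a sketch: publish_image is a hypothetical helper, the usernames are the same placeholders used above, and it assumes you have already run docker login.

```shell
# Sketch: retag a local image for Docker Hub and push it.
# publish_image is a hypothetical helper; run `docker login` first.
# Usage: publish_image LOCAL_IMAGE HUB_USERNAME VERSION
publish_image() {
  local_image="$1"
  hub_username="$2"
  version="$3"
  # Keep the bare image name: drop the old username and any existing tag.
  image_name="${local_image##*/}"
  image_name="${image_name%%:*}"
  remote_image="$hub_username/$image_name:$version"
  # Only push if the tag step succeeded.
  docker tag "$local_image" "$remote_image" && docker push "$remote_image"
}

# Example call (commented out so that sourcing this file has no side effects):
# publish_image yourusername/example-node-app yourdockerhubusername v1
```

Chaining the two commands with && means a failed tag never leads to a push of the wrong image.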

If you’re as excited as I am, you’ll probably want to poke your nose into what’s happening in this container, and even do cool stuff with the Docker CLI.

You can list Docker containers:

$ docker ps

And you can inspect a container:

$ docker inspect <container-id>

You can view Docker logs in a Docker container:

$ docker logs <container-id>

And you can stop a running container:

$ docker stop <container-id>
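These commands combine nicely. As a rough sketch, the function below finds every running container that was started from a given image, prints its last few log lines, and then stops it. stop_containers_for is a hypothetical helper; the image name in the example call is the placeholder used throughout this post.

```shell
# Sketch: show recent logs for, then stop, every running container started from an image.
# stop_containers_for is a hypothetical helper built from the commands above.
stop_containers_for() {
  image="$1"
  # -q prints only container IDs; the ancestor filter keeps containers created from $image.
  for container_id in $(docker ps -q --filter "ancestor=$image"); do
    echo "--- last log lines of $container_id ---"
    docker logs --tail 5 "$container_id"
    docker stop "$container_id"
  done
}

# Example call (commented out so that sourcing this file has no side effects):
# stop_containers_for yourusername/example-node-app
```

Looking at the logs before stopping is a small habit that helps when a container misbehaves and you want to know why before it is gone from docker ps.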

Logging and monitoring are just as important as the application itself. You should not put an application into production without proper logging and monitoring, no matter the reason. Retrace provides first-class support for Docker containers. This guide can help you set up a Retrace agent.

The whole concept of containerization is about taking the pain out of building, shipping, and running applications. In this post, we’ve learned how to write a Dockerfile, as well as create, tag, and publish Docker images. Now is the time to leverage this knowledge and learn how to automate the entire process using continuous integration and continuous delivery. Here are some good posts on setting up continuous integration and delivery pipelines to get you started:

- What is CICD? What’s important and how to get it right

- How Kubernetes can improve your CI/CD

- Creating a continuous delivery pipeline with Git & Jenkins
